Introduction

Today, while researching approaches for quick hand mapping using contours, I came across a fantastic example in the form of a simple API by a young man named Vasu Agrawal. Not only is his code a wealth of interesting examples, but he also included some fantastic documentation that he kept whilst working on his gesture-tracking project at Carnegie Mellon University.

My goal tonight is to use his API and explore how he overcame some of the problems I've faced when implementing the image processing methods from my previous posts.

Converting from OpenCV2.x to 3.x

One of the first problems I needed to overcome related to the version of OpenCV Vasu had used when working on this project a few years back: I'm targeting OpenCV 3+, whilst his API was written for OpenCV 2.x.

The first issue came from lines 15 and 16 of GesturesApi.py:

```python
self.cap.set(cv2.cv.CV_CAP_PROP_FRAME_WIDTH, self.cameraWidth)
self.cap.set(cv2.cv.CV_CAP_PROP_FRAME_HEIGHT, self.cameraHeight)
```

Neither cv2.cv.CV_CAP_PROP_FRAME_WIDTH nor cv2.cv.CV_CAP_PROP_FRAME_HEIGHT exists in OpenCV 3.x, because the legacy cv2.cv submodule was removed. To correct this, I checked the API docs for VideoCapture.set() and found that I simply needed to make the following changes to support OpenCV 3.x:

```python
self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, self.cameraWidth)
self.cap.set(cv2.CAP_PROP_FRAME_HEIGHT, self.cameraHeight)
```

The second and last issue was with the call to findContours() on lines 118 through 120 of GesturesApi.py:

```python
self.contours, _ = cv2.findContours(self.thresholded.copy(),
                                    cv2.RETR_TREE,
                                    cv2.CHAIN_APPROX_SIMPLE)
```

Interestingly, this was an issue I'd dealt with before, so I knew the fix was to add a _ in front of self.contours to catch an extra return value. In OpenCV 2.x, findContours() returns only two values (the contours and the hierarchy), whereas OpenCV 3.x returns three: the input image, the contours and the hierarchy.

```python
_, self.contours, _ = cv2.findContours(self.thresholded.copy(),
                                       cv2.RETR_TREE,
                                       cv2.CHAIN_APPROX_SIMPLE)
```

Testing the Bundled Example

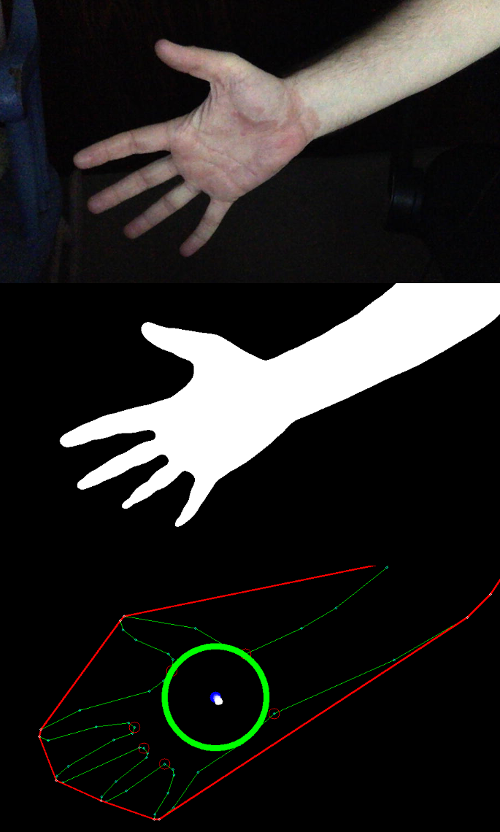

After ironing out the upgrade issues, I ran the code and was amazed by the results. The image above shows what I was able to capture with my webcam facing my flat hand.

The results were great, but there's a catch: it only works well under very specific conditions. For example, practically any change in lighting throws the entire process out of whack.

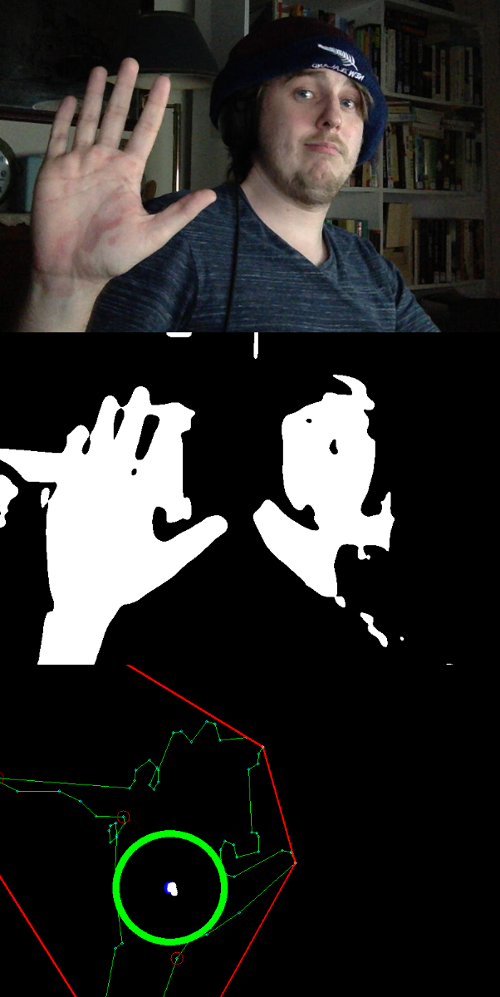

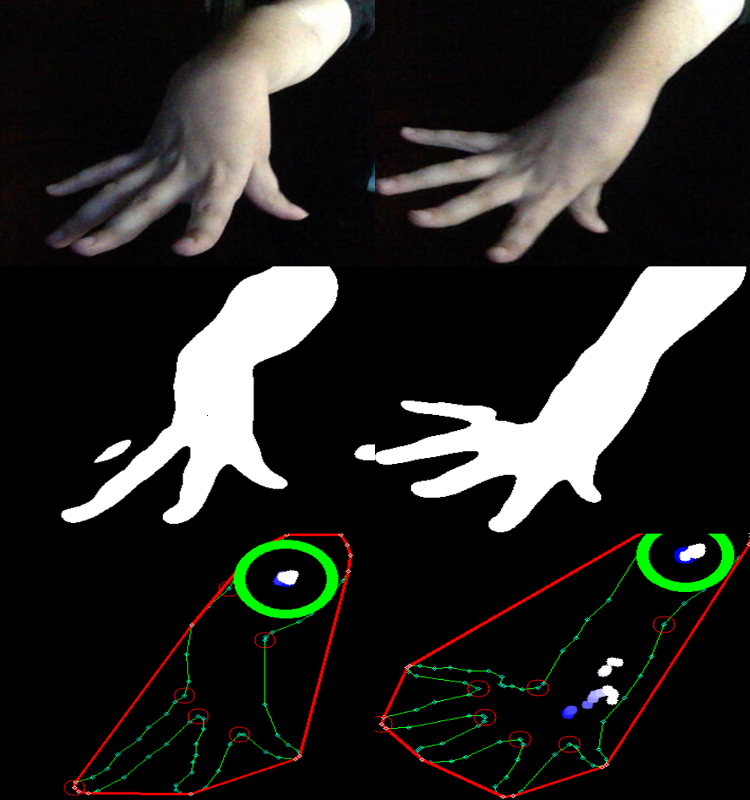

The final thing I tested was how it handled my partner's hand. She lives with cerebral palsy and, more specifically, deals daily with flexion (abnormal bending at the wrist or of the fingers) caused by muscle spasticity. This means her hand won't always be in a shape that is easily analysed, so I really wanted to know how the current algorithm handles hand matching.

The results of the test were very interesting: it looks like the center point of the hand isn't calculated correctly because of the abrupt changes in direction that parts of her hand make. I do have the advantage of being able to use a small LEGO-brick-style sensor that will be placed on the hand during 3D capture, so hopefully I'll be able to use it as an easy-to-identify point of reference.

Conclusion

At this stage I'm really happy with the potential this API offers. Huge thanks again to Vasu Agrawal; seriously awesome work. I'm maintaining my own fork of the code base here, and anyone is welcome to contribute.

My plans for next time are to combine my own ideas with the GestureApi core library and to make sure I can use a small LEGO-like block as a reference point (Haar classification will probably be the way I go).

References

Spastic cerebral palsy - https://www.cerebralpalsy.org.au/what-is-cerebral-palsy/types-of-cerebral-palsy/spastic-cerebral-palsy/

GestureDetection API - https://github.com/VasuAgrawal/GestureDetection
